First of all, I would like to thank everyone who made this possible: the trainers, the speakers, the sponsor (Kandji), and in particular Patrick Wardle and Andy Rozenberg, who organized it! ❤️

What’s #OFTW v3.0?

OFTW v3.0 is a multi-day, in-person event that aims to help students interested in Apple security by offering them training and talks from some of the world’s top Apple security researchers. #OFTW v3.0 was held in London on 24-25 July 2025!

The first day focused on training, while the second day featured talks.

OFTW is an invite-only event, so interested students must apply and briefly describe their background and motivations. Selected attendees receive full access to the training and talks. The event is free of charge, but you need to cover the cost of travel and lodging yourself. That’s what I did: I flew from Paris to London, and it was definitely worth it!

OFTW is organised by the Objective-See Foundation, a non-profit foundation that creates free, open-source macOS security tools, a book series, and conferences such as the well-known OBTS (Objective by the Sea). The foundation is led by Patrick Wardle, a renowned Mac malware researcher, and Andy Rozenberg.

If you would like to attend events like this one, I strongly encourage you to apply for the next edition. Even though you still have to pay for transportation and the hotel, it is well worth it.

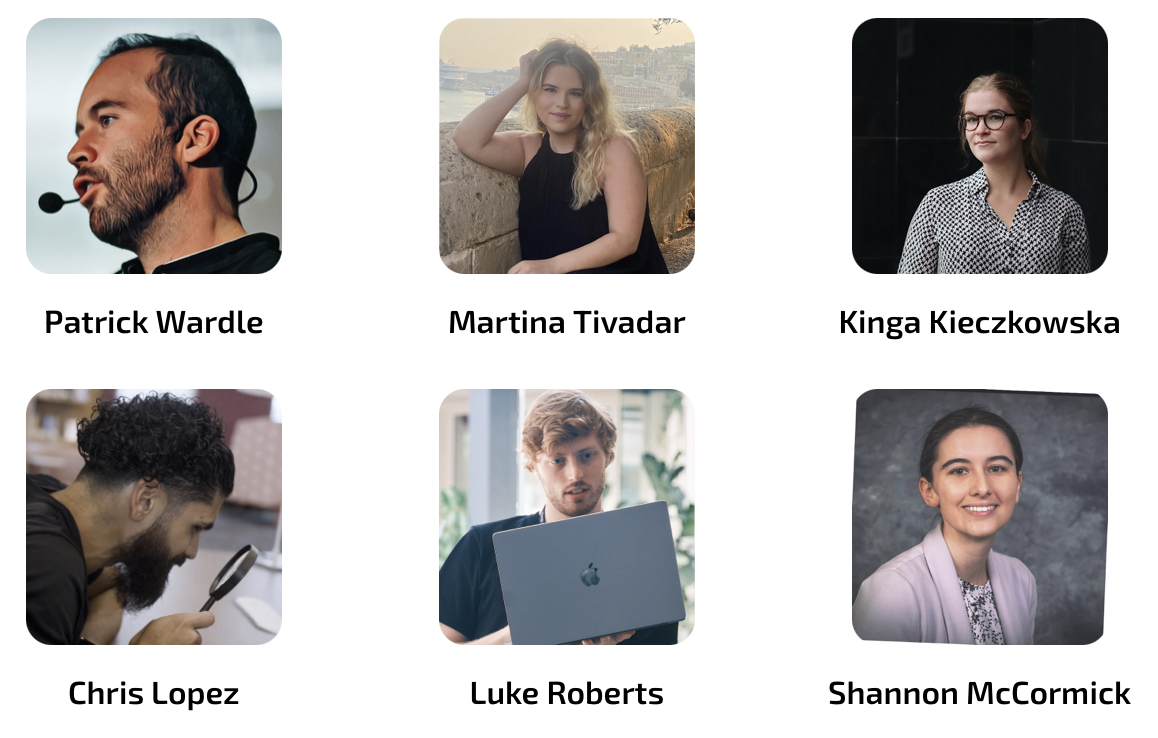

This edition’s trainers and speakers included (among others):

For the training, I attended “How to Use LLMs to Detect macOS Malware” by Martina Tivadar (Research Assistant at iVerify).

The talk program was:

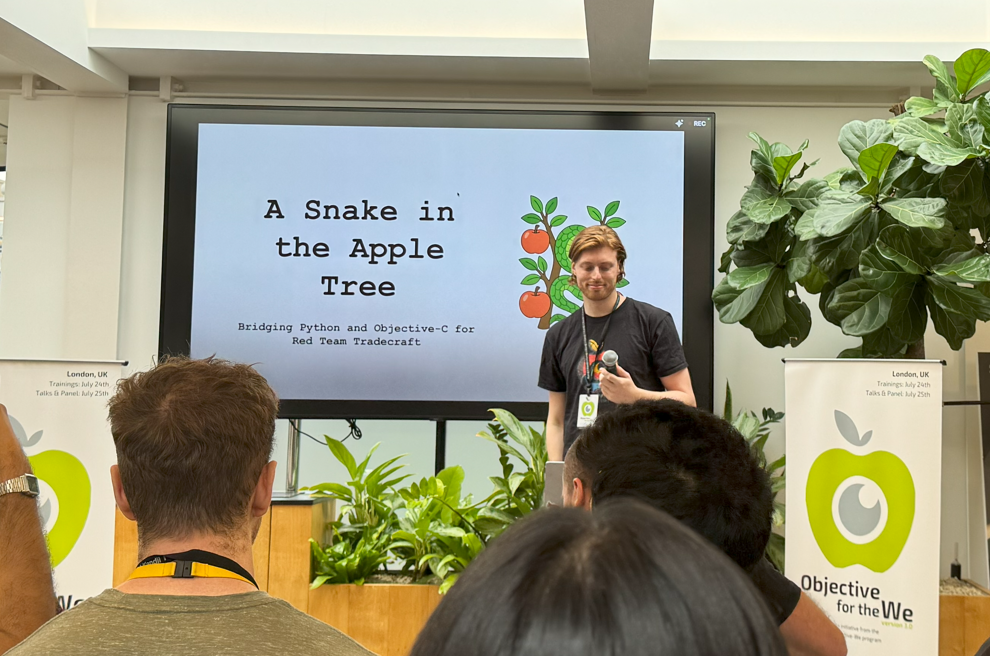

- A Snake in the Apple Tree: Bridging Python and Objective-C for Red Team Tradecraft - Luke Roberts (Senior Red Team Engineer, GitHub)

- How Far Can We Push AI for Detection - Martina Tivadar (iVerify)

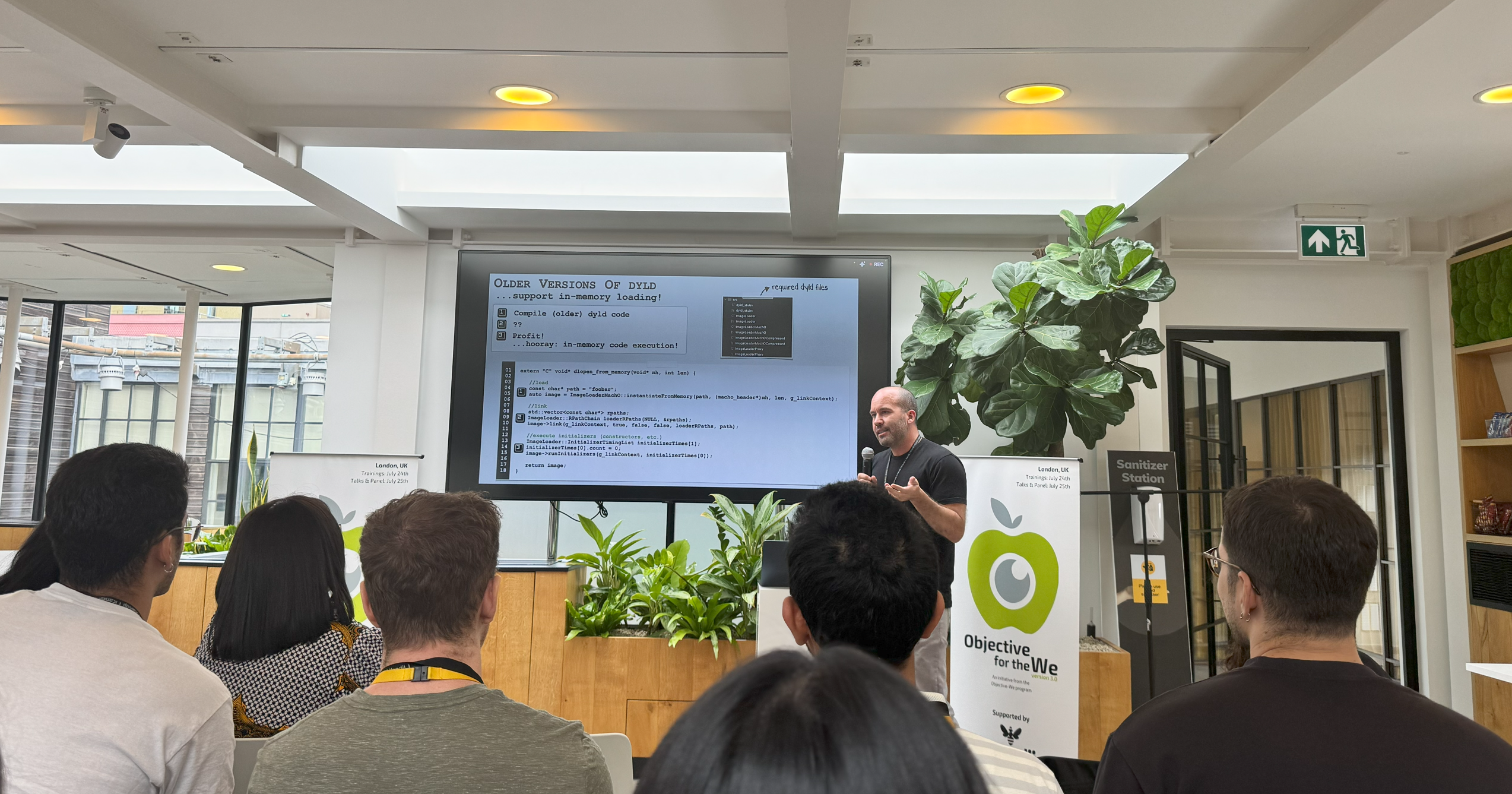

- Mirror Mirror: Restoring Reflective Code Loading on macOS - Patrick Wardle (Objective-See Foundation)

- From Alert to Action: Investigating a Real Security Incident - Shannon McCormick (Senior Incident Responder, Salesforce)

- Come Reverse Engineer Malware With Me - Chris Lopez (Senior macOS Security Researcher, Kandji)

- iPhone Forensics 101: Backups - Kinga Kieczkowska (Rada Cyber Security)

Day 1 - Training

How to Use LLMs to Detect macOS Malware

Introduction

Martina Tivadar, a research assistant at iVerify, showed us how large language models (LLMs) can help detect macOS malware.

During the training, we used Python, Apple’s Endpoint Security framework, and LLMs (local models running in LM Studio, as well as OpenAI’s API) to detect macOS malware.

What’s Apple’s Endpoint Security framework?

Like me, you might be wondering what the Endpoint Security framework is!

Apple’s Endpoint Security (ES) framework is a C API that lets a user-space program subscribe to a live stream of security-relevant events coming from the macOS kernel (process launches, file writes, mount operations, logins, XProtect scans, and ~100 more).

It sits inside the newer System Extension architecture, so you no longer need a fragile kernel extension to watch the system. Apple first previewed ES at WWDC 2019 and shipped it in macOS 10.15 Catalina; each macOS release since has expanded the event list and tooling.

You can watch Apple’s WWDC 2019 & 2020 videos and resources if you want:

- Build an Endpoint Security app

- System Extensions and DriverKit

- Monitoring System Events with Endpoint Security

The ES framework is very useful in a malware analysis and threat hunting context!

In fact, it gives us “X-ray vision” into attacker tradecraft. Because ES delivers raw, timestamped data about every exec, mmap, file creation and more, we can line up a chain of events that often spells out “persistence”, “lateral movement”, etc. Thanks to its rich context, each event arrives in an es_message_t struct that already contains file metadata, code-signing info, and information about the parent process.
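To make the idea of lining up a chain of events more concrete, here is a toy Python sketch. It does not use the real es_message_t or eslogger schema: it assumes a hypothetical, simplified event dict (seq, type, pid, ppid, path), and the “suspicious” path prefixes are illustrative choices, not a vetted detection rule. It flags a plist dropped into a LaunchAgents/LaunchDaemons folder by a process whose ancestor was executed from a temporary directory or a mounted volume.

```python
"""Toy correlation of ES-style events into a 'persistence' chain.

Assumes a HYPOTHETICAL simplified event format (not eslogger's real schema):
{"seq": int, "type": "exec" | "fork" | "create", "pid": int, "ppid": int, "path": str}
"""
from dataclasses import dataclass

# Illustrative assumptions, not an exhaustive list.
SUSPICIOUS_EXEC_PREFIXES = ("/tmp/", "/private/tmp/", "/var/folders/", "/Volumes/")
PERSISTENCE_DIR_MARKERS = ("/Library/LaunchAgents/", "/Library/LaunchDaemons/")


@dataclass
class Finding:
    exec_path: str   # suspicious binary at the root of the chain
    plist_path: str  # persistence plist that was dropped
    pid: int         # process that created the plist


def find_persistence_chains(events: list[dict]) -> list[Finding]:
    """Flag plist drops in LaunchAgents/Daemons by a descendant of a suspicious exec."""
    parent: dict[int, int] = {}           # pid -> ppid, recorded from every event
    suspicious_root: dict[int, str] = {}  # pid -> suspicious path it executed
    findings: list[Finding] = []

    for ev in sorted(events, key=lambda e: e["seq"]):  # replay in order
        pid, ppid = ev["pid"], ev["ppid"]
        parent.setdefault(pid, ppid)
        if ev["type"] == "exec" and ev["path"].startswith(SUSPICIOUS_EXEC_PREFIXES):
            suspicious_root[pid] = ev["path"]
        elif (
            ev["type"] == "create"
            and ev["path"].endswith(".plist")
            and any(marker in ev["path"] for marker in PERSISTENCE_DIR_MARKERS)
        ):
            # Walk up the process lineage looking for a suspicious ancestor.
            cur, seen = pid, set()
            while cur in parent and cur not in seen:
                if cur in suspicious_root:
                    findings.append(Finding(suspicious_root[cur], ev["path"], pid))
                    break
                seen.add(cur)
                cur = parent[cur]
    return findings
```

Real ES data would need pid reuse handling and the proper fork/exec semantics, but the principle is the same: correlate individual events through the process lineage.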

If you want to learn more about this, I encourage you to read these resources:

Now, let’s get back to the training.

Manual way

Here’s how we detected macOS malware the manual way, with a local LLM.

Lab 1. Collecting real macOS malware telemetry

Isolation first: we used a macOS VM, downloaded malware samples from the objective-see/malware GitHub repo, granted Terminal Full Disk Access, then removed the network interface.

After this, we ran eslogger with a broad event filter, executed the malware, and captured everything to events.json:

```bash
sudo eslogger exec create rename unlink tcc_modify open close write fork exit mount unmount signal kextload kextunload cs_invalidated proc_check > events.json
```

To exfiltrate the log we attached a detachable disk image, copied the file over, unmounted, synced, and mounted the image on the host.
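Back on the host, a quick sanity check before feeding anything to a model is to count events per type. A minimal sketch, assuming events.json holds one JSON object per line and that each object’s "event" dict is keyed by the event name (e.g. {"event": {"exec": {...}}}), which is the shape the preprocessing script later in this post also relies on. The function name is my own.

```python
"""Summarize an eslogger capture by event type (sketch, JSON Lines input assumed)."""
import json
from collections import Counter


def count_event_types(path: str) -> Counter:
    counts: Counter = Counter()
    with open(path, encoding="utf-8") as fh:
        for lineno, line in enumerate(fh, 1):
            line = line.strip()
            if not line:
                continue  # ignore blank lines
            try:
                record = json.loads(line)
            except json.JSONDecodeError:
                # Stopping the capture with Ctrl-C can leave a truncated last line.
                print(f"skipping malformed line {lineno}")
                continue
            # The single key inside "event" names the event type.
            for event_name in record.get("event", {}):
                counts[event_name] += 1
    return counts
```

For example, `count_event_types("events.json").most_common(5)` shows which event types dominate the capture, which is a good hint of what to filter before prompting.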

Lab 2. Turning noisy telemetry into prompt-sized data

We wrote a Python script that truncated the log so that the total context stayed under 40,000 tokens. This was necessary because we were running local models in LM Studio: mine ran on a MacBook Air M2, so I had to limit myself to a small context window.

To truncate the logs we dropped some fields that add little semantic value.
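A minimal sketch of the truncation step, assuming a rough 4-characters-per-token estimate (real tokenizers differ per model; you could pass a tiktoken-based counter instead). `truncate_to_budget` and `approx_tokens` are hypothetical names, not the training’s script. It keeps the longest prefix of events that fits, so the kept events stay in their original order.

```python
import json
from typing import Callable, Iterable


def approx_tokens(text: str) -> int:
    """Very rough estimate: ~4 characters per token (assumption, model-dependent)."""
    return max(1, len(text) // 4)


def truncate_to_budget(
    events: Iterable[dict],
    max_tokens: int = 40_000,
    count_tokens: Callable[[str], int] = approx_tokens,
) -> list[dict]:
    """Return the longest prefix of `events` whose compact JSON fits in `max_tokens`."""
    kept: list[dict] = []
    used = 0
    for ev in events:
        # Compact separators avoid spending tokens on whitespace.
        cost = count_tokens(json.dumps(ev, separators=(",", ":")))
        if used + cost > max_tokens:
            break  # stop at the first event that no longer fits
        kept.append(ev)
        used += cost
    return kept
```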

Lab 3. Exploring prompt engineering with local models

Using LM Studio, we hosted several local models for testing, pointed a simple REST example at http://localhost:1234/v1/chat/completions, and tried six prompt styles:

- zero-shot

- one-shot

- few-shot

- chain-of-thought

- negative

- hybrid
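Here is a sketch of how the six styles can be sent to LM Studio’s OpenAI-compatible endpoint using only the standard library. The prompt templates, the `local-model` name, and the helper names are my own illustrative assumptions, not the training’s material.

```python
"""Build chat requests for different prompt styles (illustrative templates)."""
import json
import urllib.request

LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"
TASK = "Classify these macOS Endpoint Security events as MALICIOUS or BENIGN.\n"
EXAMPLE_BAD = "Example: exec of unsigned /tmp/update -> MALICIOUS\n"
EXAMPLE_OK = "Example: exec of Apple-signed /usr/bin/git -> BENIGN\n"

PROMPT_STYLES = {
    "zero-shot": TASK + "Events:\n{events}",
    "one-shot": TASK + EXAMPLE_BAD + "Events:\n{events}",
    "few-shot": TASK + EXAMPLE_BAD + EXAMPLE_OK + "Events:\n{events}",
    "chain-of-thought": TASK + "Reason step by step about each process before answering.\nEvents:\n{events}",
    "negative": TASK + "Do NOT flag Apple-signed binaries or developer tools on their own.\nEvents:\n{events}",
    "hybrid": TASK + EXAMPLE_BAD
    + "Reason step by step, and do NOT flag Apple-signed binaries on their own.\nEvents:\n{events}",
}


def build_payload(style: str, events_json: str, model: str = "local-model") -> dict:
    """Return an OpenAI-style chat completion body for the given prompt style."""
    # Braces inside events_json are safe: format() only parses the template.
    prompt = PROMPT_STYLES[style].format(events=events_json)
    return {
        "model": model,  # hypothetical name: use the identifier shown in LM Studio
        "messages": [
            {"role": "system", "content": "You are a macOS malware analyst."},
            {"role": "user", "content": prompt},
        ],
        "temperature": 0,  # deterministic-ish output makes styles easier to compare
    }


def ask_lmstudio(payload: dict, url: str = LMSTUDIO_URL) -> str:
    """POST the payload and return the first choice's message text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Looping over `PROMPT_STYLES` with the same truncated events makes it easy to compare the styles side by side.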

In the end, zero-shot came out on top on the malware set (F1 ≈ 0.96, with near-perfect recall), while chain-of-thought was a close second.
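F1 is the harmonic mean of precision and recall, so near-perfect recall can still give an F1 below 1 when a few benign samples get flagged. A small sketch to compute it; the function name and the labels in the usage note are made up for illustration, not the training’s results.

```python
def prf1(y_true: list[bool], y_pred: list[bool]) -> tuple[float, float, float]:
    """Precision, recall and F1 for binary labels (True = malicious)."""
    tp = sum(t and p for t, p in zip(y_true, y_pred))          # malware caught
    fp = sum((not t) and p for t, p in zip(y_true, y_pred))    # benign flagged
    fn = sum(t and (not p) for t, p in zip(y_true, y_pred))    # malware missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 = 2PR / (P + R)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

For example, `prf1([True] * 10 + [False] * 2, [True] * 11 + [False])` gives perfect recall but precision 10/11, so F1 = 20/21 ≈ 0.95.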

Automating everything in the cloud

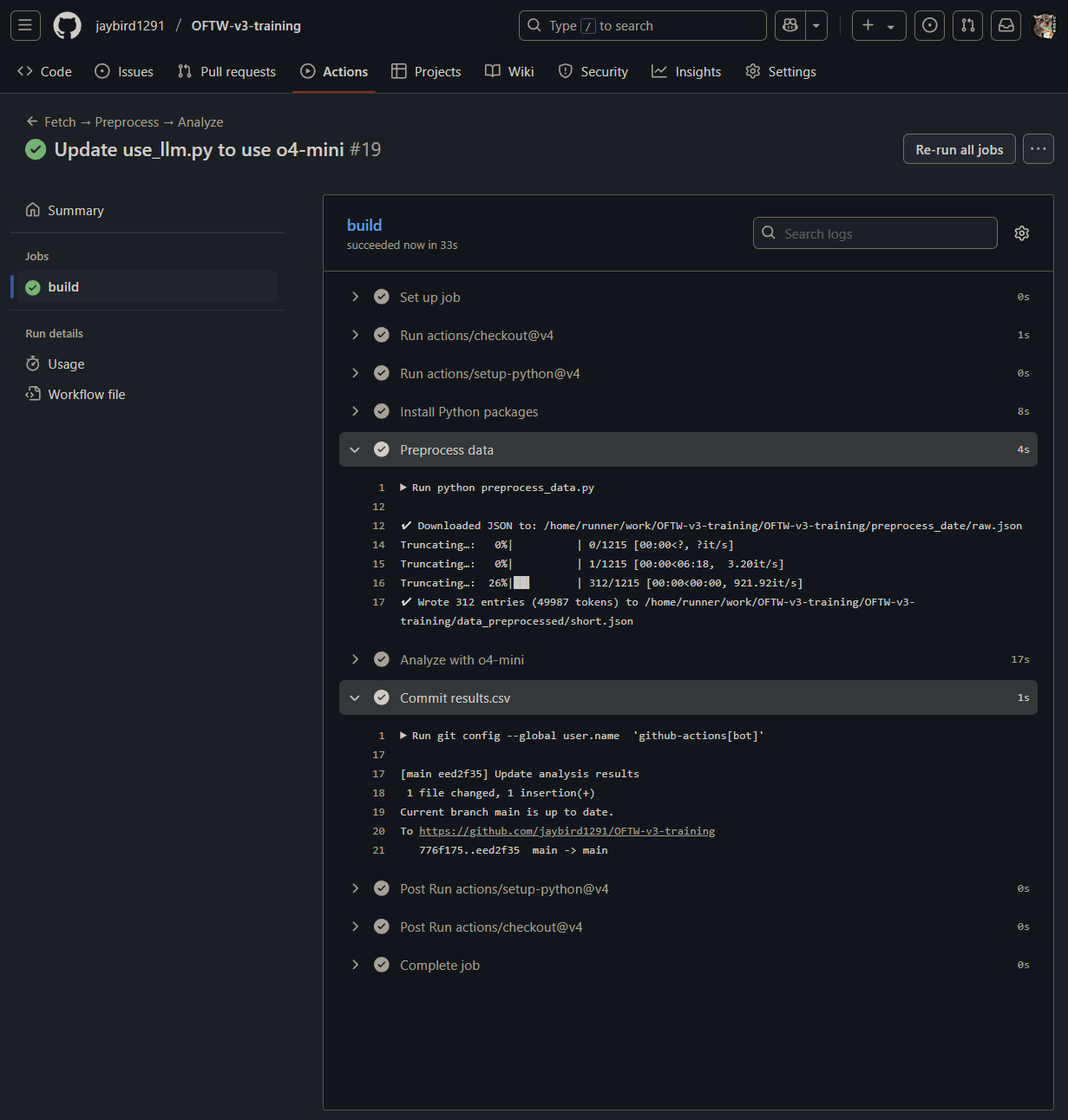

You can find the automation in the GitHub Actions run from my repo: https://github.com/jaybird1291/OFTW-v3-training/actions/runs/16553582347/job/46811889162

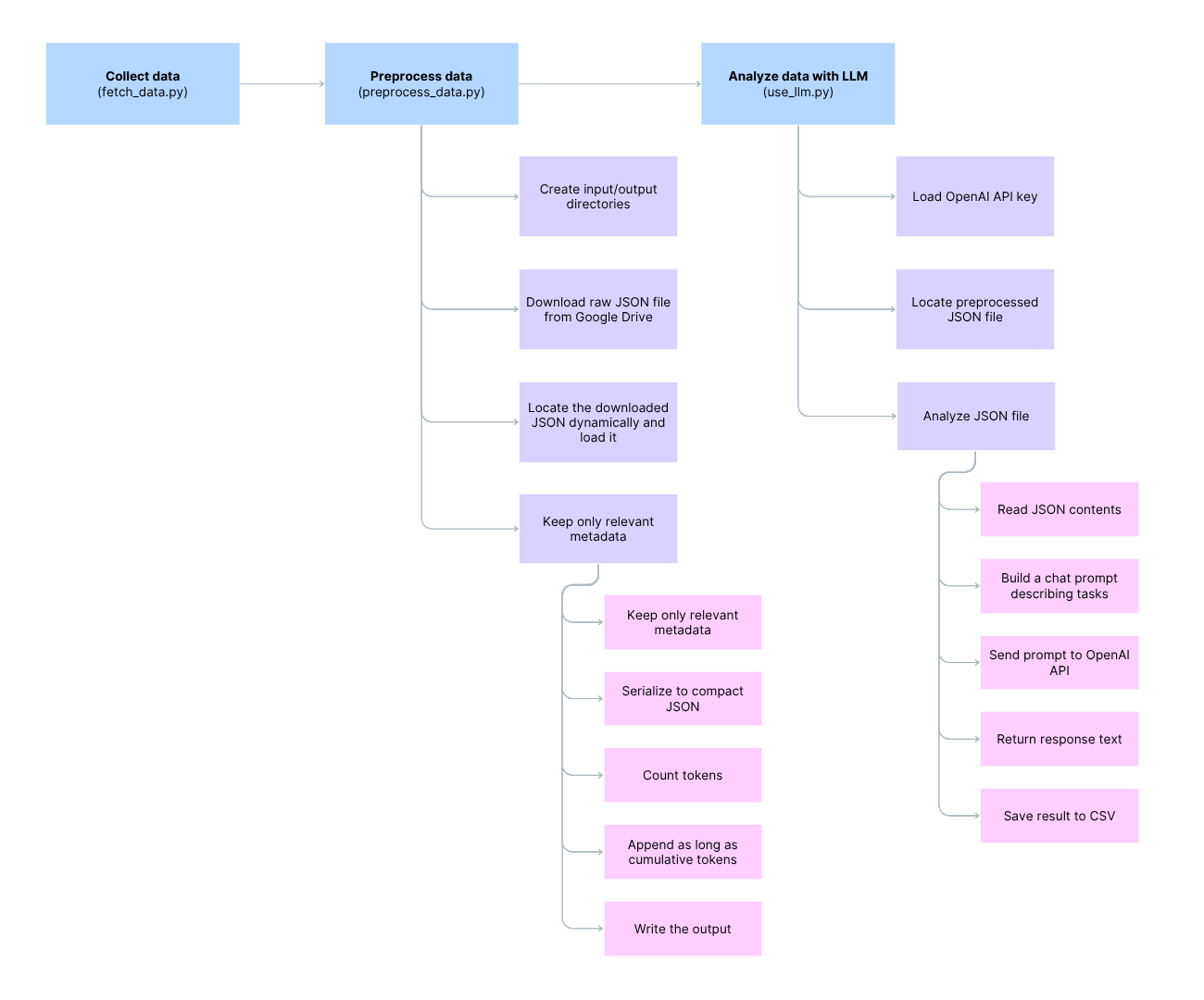

Here’s a preview of the final workflow using the OpenAI API:

Setup

First, we had to set up a GitHub repo with an OpenAI API key stored as an Actions secret.

GitHub Actions lets us define one or more “workflows” in .github/workflows/*.yml. Each workflow lists the events (push, PR, schedule) that trigger it. A workflow contains jobs, and each job runs a sequence of steps (shell commands, reusable actions, etc.).

The actions themselves are reusable steps (such as actions/checkout). Here, the workflow lets us automatically collect the data, preprocess it, analyze it with the OpenAI API, and so on.

You could also replicate this in your own self-hosted CI/CD, which makes it very useful professionally on on-premises infrastructure (for example in a SOC or incident-response context where you don’t want sensitive data to leave your network).

Workflow

The workflow is pretty simple:

- Fetch the data

- Preprocess it

- Analyze it

```yaml
name: Fetch → Preprocess → Analyze

on: push

permissions:
  contents: write          # allow the bot to commit results.csv

jobs:
  build:
    runs-on: ubuntu-latest
    env:
      OPENAI_API_KEY: ${{ secrets.OPENAI_API_KEY }}   # expose the secret to every step

    steps:
      # 1. Source code
      - uses: actions/checkout@v4            # latest checkout action

      # 2. Python runtime
      - uses: actions/setup-python@v4
        with:
          python-version: "3.11"             # use one interpreter for every script

      # 3. Dependencies
      - name: Install Python packages
        run: |
          python -m pip install --upgrade pip
          pip install requests tqdm tiktoken openai

      # 4. Download & truncate (writes data_preprocessed/short.json)
      - name: Preprocess data
        run: python preprocess_data.py

      # 5. LLM analysis (reads ./data_preprocessed/short.json)
      - name: Analyze with o4-mini
        run: python use_llm.py

      # 6. Commit the CSV (if it changed)
      - name: Commit results.csv
        run: |
          git config --global user.name 'github-actions[bot]'
          git config --global user.email 'github-actions[bot]@users.noreply.github.com'
          git add results.csv
          git commit -m "Update analysis results" || echo "Nothing to commit"
          git pull --rebase
          git push
```

Collecting and preprocessing data

The scripts fetch_data.py and preprocess_data.py download JSON logs generated by the ES framework from Google Drive and preprocess them. The JSON includes events such as exec, create, rename, unlink, tcc_modify, open and others.

We preprocessed the logs by removing unnecessary columns, handling missing values, encoding categorical variables, performing feature engineering, scaling data and limiting the token size.

This part of preprocess_data.py is the most interesting! We only keep the fields that help with analysis and pivoting:

- Process info: pid, ppid, full argv, code-signing metadata, etc.

- File events (create, rename): both paths plus POSIX metadata.

- Exec events: target path and command line of the spawned process.

Everything else (counts, policy IDs, redundant flags) is dropped, shrinking the final JSON.

```python
def prune_fields(item: dict) -> dict:
    """Return only the fields that are valuable for malware analysis."""
    proc = item.get("process", {})

    pr = {
        "event_type": item.get("event_type"),
        "time": item.get("time"),
        # ---------- process ----------
        "process": {
            "pid": proc.get("pid"),
            "ppid": proc.get("ppid"),
            "start_time": proc.get("start_time"),
            "argv": proc.get("arguments"),  # full command-line
            "executable_path": proc.get("executable", {}).get("path"),
            "uid": proc.get("audit_token", {}).get("uid"),
            "euid": proc.get("audit_token", {}).get("euid"),
            "gid": proc.get("audit_token", {}).get("gid"),
            "signing_id": proc.get("signing_id"),
            "cdhash": proc.get("cdhash"),
            "team_id": proc.get("team_id"),
            "is_platform_binary": proc.get("is_platform_binary"),
            "image_uuid": proc.get("image_uuid"),
        },
    }

    # ---------- file / fs events ----------
    ev = item.get("event", {})

    if "create" in ev:
        dst = ev["create"].get("destination", {}).get("existing_file", {})
        pr["event"] = {
            "create": {
                "destination_path": dst.get("path"),
                "inode": dst.get("inode"),
                "mode": dst.get("mode"),
                "uid": dst.get("uid"),
                "gid": dst.get("gid"),
            }
        }
    elif "rename" in ev:
        src = ev["rename"].get("source", {})
        dst = ev["rename"].get("destination", {}).get("existing_file", {})
        pr["event"] = {
            "rename": {
                "source_path": src.get("path"),
                "destination_path": dst.get("path"),
                "inode": dst.get("inode"),
                "mode": dst.get("mode"),
                "uid": dst.get("uid"),
                "gid": dst.get("gid"),
            }
        }
    elif "exec" in ev:  # keep exec details too
        ex = ev["exec"].get("process", {})
        pr["event"] = {
            "exec": {
                "target_path": ex.get("executable", {}).get("path"),
                "argv": ex.get("arguments"),
                "cs_flags": ex.get("cs_flags"),
                "signer_type": ex.get("signer_type"),
            }
        }

    return pr
```

Analyzing data

To analyze the data, we limited ourselves to o4-mini because of cost. Here’s a basic comparison of OpenAI models:

For my prompt, I used a chain-of-thought style simply because I prefer that kind of prompt. Since the context window was very large, I gave the model the most precise context I could.

Here’s what it looks like:

```python
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment


def analyze_json(file_path: str, model: str = "o4-mini") -> str:
    with open(file_path, "r", encoding="utf-8") as f:
        data_str = f.read()

    prompt = (
        f"""YOUR PROMPT

Tasks:
1. Parse the input JSON array of EndpointSecurity (ES) events.
2. Identify sequences or individual events that plausibly indicate malware or post-exploitation behaviour.
3. Give recommendations, possible artefacts to check and retrieve and a check list for a deeper investigation by a dedicated team.

Context
- Event types present: exec, create, rename, unlink, tcc_modify, open, close, write, fork, exit, mount, unmount, signal, kextload, kextunload, cs_invalidated, proc_check.
- Typical malicious clues include:
  - Unsigned or ad-hoc-signed binaries executed or kext-loaded.
  - Exec / write / rename in temporary, hidden, or user-library paths (`/tmp`, `/var/folders`, `~/Library/*/LaunchAgents`, etc.).
  - Rapid fork chains (“fork bombs”), unexpected `signal` storms, or `proc_check` failures.
  - `tcc_modify` denying transparency-consent or privacy prompts.
  - `mount` or `unmount` of disk images followed by `exec`.
  - `cs_invalidated` on running code or `kextunload` immediately after `kextload`.
  - Creation or unlinking of persistence files (LaunchAgents/Daemons, login hooks, cron, rc.plist).
- Treat developer tools, Apple-signed code, and items in `/Applications` as low-risk unless combined with other red flags.
- List of typical malware behaviour / IOC to rely on:
  - Silver Sparrow style: LaunchAgent under ~/Library/Application Support/ with “agent_updater”-like name; DMG mount → exec chain; binary self-deletes.
  - Shlayer style: “Flash Player” installer writes shell script to /private/tmp then launches via open; cleans up with unlink.
  - XLoader style: hidden java-child process in ~/Library/Containers/... ; key-logging and clipboard read; persistence via user LaunchAgent.
  - Adload style: ≥1 LaunchAgent **and** two LaunchDaemons, plus cron job; payload hidden in ~/Library/Application Support/<UUID>/<UUID>.
  - MacStealer style: exfil files staged in /var/folders/*/T/* then zipped and POSTed, directory removed afterwards.
  - TCC-bypass/ColdRoot: direct edits to TCC.db (tcc_modify) or cs_invalidated events on unsigned binaries touching privacy-protected resources.
  - 2024 backdoors: unsigned bundle in user Library with innocuous icon; spawns reverse-shell child after 30-120 s sleep.
  - Turtle (ransomware) pattern: burst of fork + write events (>500 files/min) followed by extension rename.
  - LaunchAgent/LaunchDaemon persistence (MITRE T1543.001/.004): new *.plist in /Library/LaunchDaemons or ~/Library/LaunchAgents with RunAtLoad=true.
  - Plist modification (MITRE T1647): sudden changes to Info.plist or LSEnvironment keys enabling hidden execution or dylib hijack.

{data_str}
"""
    )

    completion = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": "You are an expert macOS incident-response analyst."},
            {"role": "user", "content": prompt},
        ],
    )
    return completion.choices[0].message.content.strip()
```
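The workflow commits results.csv, but the post doesn’t show how use_llm.py writes it. Here’s a hypothetical helper (the function name and column names are assumptions) that appends one row per run; the csv module quotes the multi-line model answer so it stays a single field.

```python
"""Append one analysis result per run to a CSV file (hypothetical helper)."""
import csv
from datetime import datetime, timezone
from pathlib import Path


def append_result(csv_path: str, source_file: str, model: str, analysis: str) -> None:
    path = Path(csv_path)
    # Write the header only when the file is new or empty.
    write_header = not path.exists() or path.stat().st_size == 0
    with path.open("a", newline="", encoding="utf-8") as fh:
        writer = csv.writer(fh)
        if write_header:
            writer.writerow(["timestamp_utc", "source_file", "model", "analysis"])
        writer.writerow([
            datetime.now(timezone.utc).isoformat(timespec="seconds"),
            source_file,
            model,
            analysis,  # newlines inside the answer are quoted by the csv module
        ])
```

Usage would look like `append_result("results.csv", "data_preprocessed/short.json", "o4-mini", analyze_json("data_preprocessed/short.json"))`. Appending rather than overwriting keeps a history of runs in the committed CSV.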

Here’s what the GitHub Actions run looks like:

And here’s o4-mini’s answer for the ES logs from Atomic Stealer:

```text
Analysis Results:

1. Suspicious execution chain
– 19:40:08 “Trading View” launched from /Volumes/Trading View (adhoc-signed, external volume)
– Immediately creates folder “521272586” under the user’s home and subdirs “Chromium”, “Chrome”
– Copies sensitive browser artefacts: Autofill0, Password1, Cookies2
– Spawns dscl (reads directory services), osascript, system_profiler (gathers system inventory)
– Writes “password-entered” and “login-keychain” files
– Zips the entire folder (ditto → 521272586.zip)
=> Classic “stealer” behavior: harvest browser credentials, system info, keychain hints, bundle and prepare for exfiltration.

2. Key artefacts to collect now
• /Volumes/Trading View/Trading View.app & associated helpers – check code signature, inspect binary for strings, sandbox escapes
• ~/521272586/ and ~/521272586.zip – exfiltrated payload, browser profiles, keychain dumps
• Chrome ~/Library/Application Support/Google/Chrome/Default/Cookies, Login Data, Local State
• Firefox ~/Library/Application Support/Firefox/Profiles/*.default*/cookies.sqlite, logins.json
• ~/Library/Keychains/login-keychain & password-entered file
• Shell history (~/.zsh_history) around 19:40:08
• /var/folders/*/T/com.apple.mobileassetd for MADAnalytics files
• /System/Library/AssetsV2/persisted/AutoControlManager & AutoAssetScheduler states
• TCC database (/Library/Application Support/com.apple.TCC/TCC.db) for unauthorized entries

3. Recommended triage steps
a. Isolate the host (block further network egress)
b. Acquire a full disk image and live memory
c. Extract and inspect the “Trading View” binary with strings, lldb, Hopper/IDA
d. Decompress 521272586.zip, examine stolen data, check for exfil scripts or hardcoded C2
e. Audit browser extensions, check for malicious injected code or stolen session tokens
f. Review launchd, cron, LaunchAgents/Daemons for persistence plist entries (~/Library/LaunchAgents, /Library/LaunchDaemons)
g. Check for unexpected network connections in /var/log/wifi.log, pf or firewall logs around 19:40
h. Search syslog and unified logs for any cs_invalidated, kextload, or tcc_modify events

4. Investigation checklist
1. Collect disk image & memory
2. Extract and validate “Trading View” code signature; compute hash against known malware DBs
3. Parse shell history for subsequent exfil commands
4. Recover browser data stolen; identify what exactly was grabbed
5. Analyze mobileassetd & chronod writes (may reveal other asset downloads or tracking)
6. Dump TCC.db and check consent grants for osascript or system_profiler
7. Review network sessions (packet captures, proxy logs) for POST of 521272586.zip
8. Hunt for persistence: new plist in ~/Library/LaunchAgents, ~/Library/LaunchDaemons, /etc/cron*
9. Audit kext, kernel messages for cs_invalidated or kextload/unload around activity
10. Elevate case to IR team for full forensic and IOC rollout
```

Day 2 - Talks

For the talks, I think the best thing is for you to look at the slides and materials yourself! Honestly, I don’t think I could add anything better haha.

I particularly liked Patrick Wardle’s and Shannon McCormick’s talks (personal preference)!

Here are the slides I was able to get or find online:

Mirror Mirror: Restoring Reflective Code Loading on macOS - Patrick Wardle (Objective-See Foundation):

From Alert to Action: Investigating a Real Security Incident - Shannon McCormick (Senior Incident Responder, Salesforce)

- Link to download: files/Shannon-McCormick-Talk.pdf

How Far Can We Push AI for Detection - Martina Tivadar (iVerify)

- Not exactly this talk, but close: https://www.youtube.com/watch?v=cU9Az43D2Ic and https://objectivebythesea.org/v7/talks/OBTS_v7_mTivadar.pdf

iPhone Forensics 101: Backups - Kinga Kieczkowska (Rada Cyber Security)

Here are also some pictures of the talks. The venue was really nice, btw!